AI doesn’t know you exist.

Let’s fix that.

PromptSeed is the Generative Engine Optimization (GEO) toolkit that gets your brand mentioned in AI answers from ChatGPT, Claude, and Gemini. Simulate prompts, analyze mentions, audit pages, and ship content that models can quote.

The problem

AI answers are the new front page. If you’re not there, you’re invisible. Models summarize rather than link, so they have no need to cite you.

- Missing from model answers

- Pages optimized for Google, not models

- No visibility into credited sources

The fix

PromptSeed gives you a repeatable GEO workflow: simulate prompts, analyze mentions, and audit pages to earn citations.

- Simulate across ChatGPT/Claude/Gemini

- Analyze mentions & sentiment

- Audit for direct quotability

Try the Prompt Simulator

Run your prompt across engines, compare tone variants, and export a decision log. See how often your brand (and competitors) are referenced.

- Behavior & hallucination summaries

- Fixed engine order + tone tabs

- Save, compare, and export runs

- Rate limits tied to plan tiers

60-sec walkthrough · sample data

What you get

GEO tools that turn invisible brands into go-to sources inside AI answers.

Prompt Simulator

Compare engines, tones, and prompts side-by-side with exportable logs.

Mention Extractor

Track brand mentions, sentiment, and competitors across runs.
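To make "tracking mentions across runs" concrete, here is a minimal sketch of one way to compute answer-share: the fraction of answers that name each brand at least once. This is an illustration only, not PromptSeed's implementation; the function name `mention_share`, the brand names, and the sample answers are all hypothetical.

```python
import re
from collections import Counter


def mention_share(answers, brands):
    """Return, per brand, the fraction of answers that mention it at least once."""
    counts = Counter()
    for text in answers:
        for brand in brands:
            # Whole-word, case-insensitive match so "Acme" does not match "Acmeology".
            if re.search(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE):
                counts[brand] += 1
    total = len(answers)
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


# Hypothetical sample answers, not real model output.
sample_answers = [
    "For GEO tooling, Acme and Globex are common picks.",
    "Many teams start with Globex.",
    "There is no single best option.",
    "Acme offers a free tier.",
]
shares = mention_share(sample_answers, ["Acme", "Globex", "Initech"])
print(shares)
```

Counting each answer once per brand keeps a single long answer from inflating the share. Sentiment and competitor comparison would need more than string matching.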

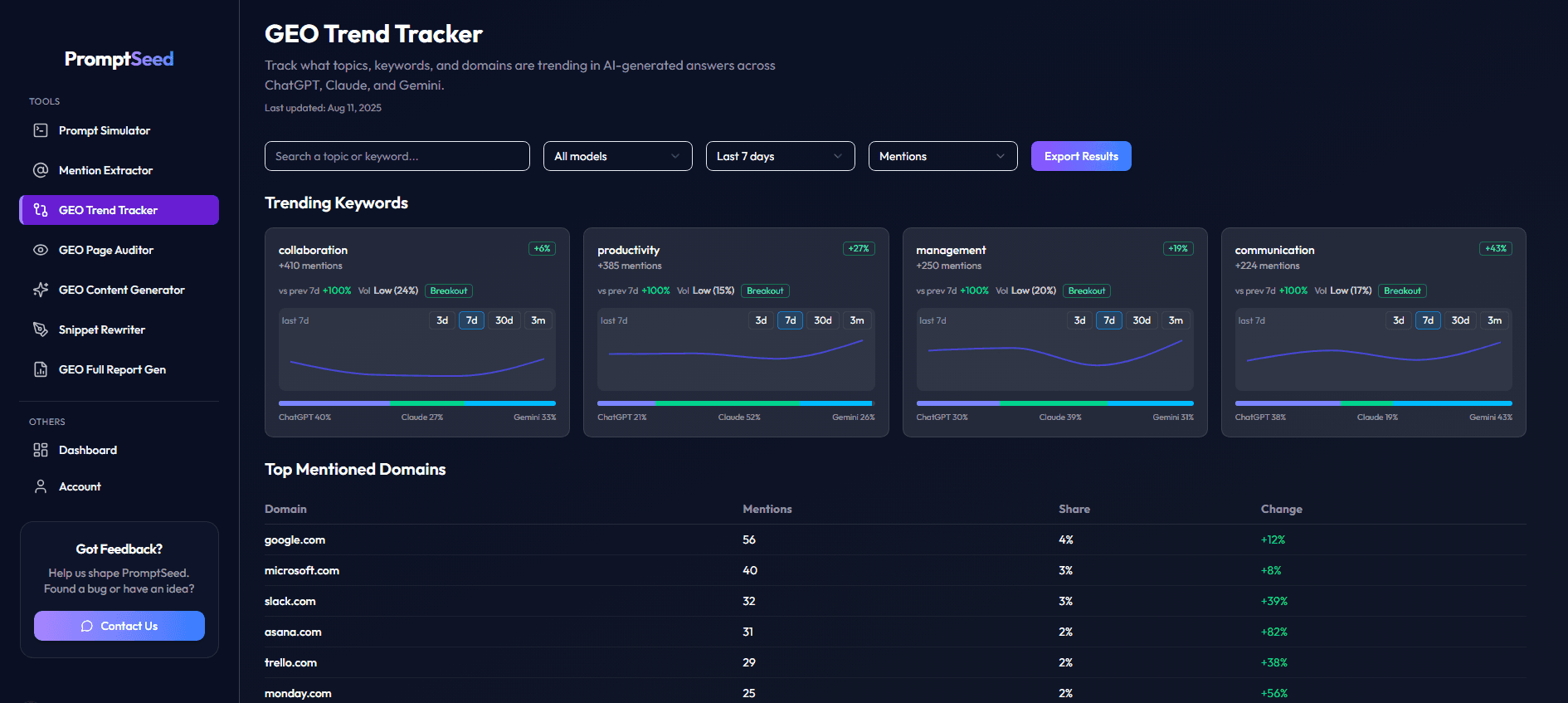

GEO Trend Tracker

See what topics engines favor this week and where to earn mentions.

GEO Page Auditor

Audit pages for answer-readiness, schema, and quotability.

GEO Content Generator

Ship content that models can cite, ready as HTML + JSON-LD.
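As a rough idea of what "HTML + JSON-LD ready" can look like, the sketch below renders question-and-answer pairs as HTML alongside a matching schema.org `FAQPage` block. It assumes FAQ-style content and is not the generator's actual output; the helper names `faq_jsonld` and `render_faq_html` and the sample pair are hypothetical.

```python
import json
from html import escape


def faq_jsonld(pairs):
    """Build a schema.org FAQPage document from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def render_faq_html(pairs):
    """Return visible HTML for the pairs, followed by the JSON-LD script tag."""
    body = "\n".join(
        f"<h3>{escape(question)}</h3>\n<p>{escape(answer)}</p>"
        for question, answer in pairs
    )
    # Escape "</" so answer text cannot close the script element early.
    data = json.dumps(faq_jsonld(pairs), indent=2).replace("</", "<\\/")
    return f'{body}\n<script type="application/ld+json">\n{data}\n</script>'


# Hypothetical sample content.
faq_pairs = [("What is GEO?", "Generative Engine Optimization.")]
print(render_faq_html(faq_pairs))
```

Keeping the visible text and the structured data generated from the same pairs means the two cannot drift apart.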

Full GEO Report

One export combining Simulator, Extractor, Auditor, and Trends into a single shareable report (PDF/CSV).

Simple, transparent pricing

Start free, upgrade anytime. Annual billing saves 25%.

“We got our brand cited in Gemini answers after two weeks of using the auditor templates.”

“The simulator made our prompts consistent across the team—huge time saver.”

“Loved the exportable logs for client reporting—felt like real QA for LLMs.”